Spatial on Saturdays No. 6

A quick intro to generative AI, GPUs, and the future of Geospatial x AI.

By the numbers

258,465

AI models listed on HuggingFace

161.58%

Increase in the stock price of Nvidia in the last 6 months

100+

Terabytes of imagery generated every day

Generative AI in geospatial

Generative AI is still booming, and geospatial is starting to catch on. Between photo editors, ChatGPT, and more, this year's generative AI explosion has not slowed down. With new companies launching and multiple acquisitions taking place, I wanted to take a look at how geospatial is adopting generative AI and other AI technologies.

Here are a few of the early adopters of generative AI in GIS:

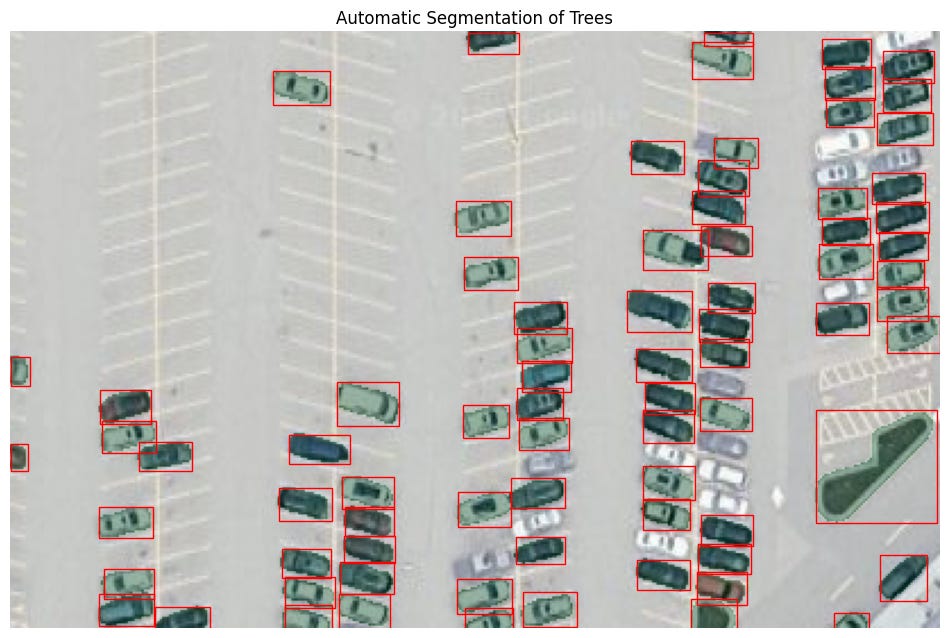

The Python package segment-geospatial uses the Segment Anything model, which takes text inputs or click actions to select different parts of an image and clip them out. While the general model works on any image, Dr. Qiusheng Wu applied it to satellite imagery. Here is an output from one of the example notebooks, which I applied to counting cars.

At CARTO we are using large language models to label data to make it more readable. In the video, we used multiple layers of data to analyze urban heat islands and create a risk index. The index itself is easy to see, but the underlying data is abstracted away. When that data is passed to the LLM, it can label all the individual attributes that go into the model.

Using AI models to label imagery and automate cartography is covered in an episode of the Practical AI podcast (I have been listening to this a lot to get a better understanding of the AI space) featuring Gabriel Ortiz from Gobierno de Cantabria. The episode has some great examples of labeling imagery and, more recently, automating cartography from imagery.

Using AI to model traffic and environmental exposure was the focus of a talk by Dr. Song Gao of the Geospatial Data Science Lab at the University of Wisconsin-Madison.

A new application that allows users to ask questions about carbon management with simple text prompts called Carbon GPT is in waitlist mode, but has some

MotherDuck, which is in beta, added generative AI abilities to DuckDB to generate SQL queries using text prompts. Faraday.ai has a similar post about using LLMs in BigQuery to label text for sentiment analysis in SQL.

Geospatial @ Coursera

Check out some of my geo-focused favorites from Coursera:

Geographic Information Systems (GIS) Specialization from UC Davis

Spatial Data Science and Applications from Yonsei University

Python for Everybody from University of Michigan

Remote Sensing Image Acquisition, Analysis and Applications

What do GPUs have to do with this?

These models require a lot of computing power. Graphics processing units, or GPUs, are the hardware that powers these models. Even the example notebooks in segment-geospatial recommend using the GPU accelerators in Google Colab.

In a recent post, Tom’s Hardware reports a research firm's estimate that ChatGPT will eventually require more than 30,000 Nvidia GPUs to run:

The research firm estimates that OpenAI's ChatGPT will eventually need over 30,000 Nvidia graphics cards. Thankfully, gamers have nothing to be concerned about, as ChatGPT won't touch the best graphics cards for gaming but rather tap into Nvidia's compute accelerators, such as the A100.

These GPUs cost between $10-15k each, so 30,000 of them could translate into $300 million or more in revenue for Nvidia. The increase in interest in generative AI and the increase in Nvidia’s stock price seem to be closely linked.
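As a quick back-of-envelope check, multiplying the figures quoted above (30,000 GPUs at $10-15k each) gives the rough revenue range. This is only a sketch of the arithmetic: the function and variable names are mine, and it ignores discounts, networking, and every other cost.

```python
# Back-of-envelope estimate using only the figures quoted in this post.
GPU_COUNT = 30_000        # estimated number of Nvidia GPUs, per the quoted article
PRICE_LOW_USD = 10_000    # low end of the quoted per-GPU price range
PRICE_HIGH_USD = 15_000   # high end of the quoted per-GPU price range


def revenue_range(gpu_count, price_low, price_high):
    """Return the (low, high) hardware revenue in US dollars."""
    return gpu_count * price_low, gpu_count * price_high


low, high = revenue_range(GPU_COUNT, PRICE_LOW_USD, PRICE_HIGH_USD)
print(f"${low / 1e6:,.0f} million to ${high / 1e6:,.0f} million")
```

That works out to somewhere between $300 million and $450 million.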

GPUs are also critical to geospatial, particularly earth observation, where you are dealing with large amounts of imagery. While GPU-accelerated databases are getting outpaced by data warehouses and other systems like DuckDB, GPUs still play an important role in imagery and remote sensing. Knowing when and how to deploy them will become a more common skill in the near future.

Where is generative AI going with geospatial?

It is still early days. We still have to see how this plays out, but I think there are four key steps that you can use to get started.

Use generative AI to write, check, and learn new code. This is an easy place to start as it has low risk (bad code just won’t work) but can help speed up work.

The next step is to use large language models to retrieve data based on text inputs. This can translate into writing SQL or other code to retrieve data.

As seen in the video above, you can use LLMs to label your data and make it far easier to understand for

The final phase will let more users interact with geospatial data. Imagine a dashboard or analyzed data where a user can ask a question and get results back, in text form and on a map. The world is already used to doing similar things with Google Maps (find restaurants near me), so making the leap to use this in spatial analysis isn’t a big one. People generally know what they want to understand (what is the long-term climate risk near my house), but the barrier to entry to doing this on a map is much higher. LLMs help break down those barriers.
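To make the second step above (retrieving data from a text prompt) a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `parks` table, the `build_prompt`, `is_read_only`, and `ask` helpers, and especially `fake_llm`, which is a hard-coded stand-in for whatever model or service you would actually call. The pattern is simply: give the model the schema and the question, check that what comes back is a single read-only query, then run it.

```python
import sqlite3
from typing import Callable


def build_prompt(question: str, schema: str) -> str:
    """Combine the table schema and the user's question into one prompt."""
    return (
        "You write SQLite queries.\n"
        f"Schema:\n{schema}\n"
        f"Question: {question}\n"
        "Reply with a single SELECT statement and nothing else."
    )


def clean(sql: str) -> str:
    """Trim whitespace and one trailing semicolon from the model's reply."""
    return sql.strip().rstrip(";").strip()


def is_read_only(sql: str) -> bool:
    """Crude guard: accept only one statement that starts with SELECT."""
    stripped = clean(sql)
    return stripped.lower().startswith("select") and ";" not in stripped


def ask(question: str, conn: sqlite3.Connection, schema: str,
        llm: Callable[[str], str]) -> list:
    """Ask the model for SQL, validate it, and return the query results."""
    sql = llm(build_prompt(question, schema))
    if not is_read_only(sql):
        raise ValueError(f"Refusing to run non-SELECT SQL: {sql!r}")
    return conn.execute(clean(sql)).fetchall()


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; returns a canned query."""
    return "SELECT name FROM parks WHERE area_km2 > 1 ORDER BY name;"


# Tiny in-memory table so the sketch runs end to end (made-up sample data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE parks (name TEXT, area_km2 REAL)")
conn.executemany(
    "INSERT INTO parks VALUES (?, ?)",
    [("Central", 3.4), ("Pocket", 0.1), ("Riverside", 1.8)],
)

schema = "parks(name TEXT, area_km2 REAL)"
rows = ask("Which parks are larger than 1 square km?", conn, schema, fake_llm)
print(rows)
```

The `is_read_only` check is deliberately simple and is not a substitute for real safeguards. In practice you would also want to run generated queries with read-only credentials.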

Finally, Abdishakur Hassan shared some great thoughts here in a recent Medium post which I highly recommend checking out.

Thanks!

If you have something you want to share or a job you want to promote you can e-mail me here. Or if you want to sponsor a newsletter, video, or post, check out this link to get in touch.